Self-Supervised Learning for Facial Action Unit Recognition through Temporal Consistency (BMVC2020 Accepted)

This repository contains the PyTorch implementation of Self-Supervised Learning for Facial Action Unit Recognition through Temporal Consistency.

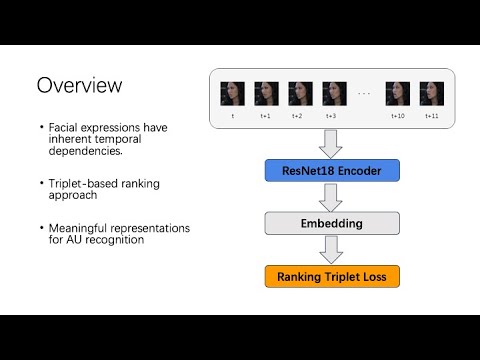

The proposed parallel-encoder network takes a sequence of frames extracted from a video. The anchor frame is selected at time t, the sibling frame at t + 1, and the following frames at equal intervals of k, from t + 1 + k to t + 1 + Nk. All input frames are fed to ResNet-18 encoders with shared weights, followed by a fully connected layer that generates 256-d embeddings. The output embeddings are L2-normalized. We then compute a triplet loss for each pair of adjacent frames, with the anchor frame held fixed. In each adjacent pair, the preceding frame is the positive sample and the following frame is the negative sample. Finally, all triplet losses are summed to form the ranking triplet loss.
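The frame sampling and the ranking triplet loss described above can be sketched roughly as follows. This is a minimal illustration, not the code from this repository: the function names `frame_indices` and `ranking_triplet_loss`, the margin value, the squared Euclidean distance, and the averaging over the batch are all assumptions made for the example. The encoder (ResNet-18 plus a fully connected layer) is left out, so the function expects the 256-d embeddings as input.

```python
import torch
import torch.nn.functional as F


def frame_indices(t, k, n):
    """Anchor at t, sibling at t + 1, then n frames at t + 1 + k, ..., t + 1 + n*k."""
    return [t, t + 1] + [t + 1 + i * k for i in range(1, n + 1)]


def ranking_triplet_loss(embeddings, margin=0.1):
    """Sum of triplet losses over adjacent frame pairs with a fixed anchor.

    embeddings: tensor of shape (batch, n_frames, dim), ordered as in
    frame_indices (anchor first, then sibling, then the following frames).
    margin: hypothetical value chosen for illustration only.
    """
    # L2-normalize each embedding, as described in the text.
    emb = F.normalize(embeddings, p=2, dim=-1)
    anchor = emb[:, :1]   # (batch, 1, dim)
    others = emb[:, 1:]   # (batch, n_frames - 1, dim)

    # Squared Euclidean distance from the anchor to every other frame
    # (the choice of distance is an assumption of this sketch).
    dist = (anchor - others).pow(2).sum(dim=-1)  # (batch, n_frames - 1)

    # For each adjacent pair, the earlier frame is the positive sample
    # and the later frame is the negative sample.
    pos = dist[:, :-1]
    neg = dist[:, 1:]
    per_pair = F.relu(pos - neg + margin)

    # Add up the triplet losses per sequence, then average over the batch.
    return per_pair.sum(dim=1).mean()
```

For example, `frame_indices(10, 3, 4)` gives the frame positions for an anchor at t = 10 with interval k = 3 and N = 4 following frames. The loss is zero when every frame is farther from the anchor than its predecessor by at least the margin, which is the temporal ordering the ranking loss encourages.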

Pretrained weights can be downloaded from here